Issues in Tech:

Complexity

January 18, 2015

This is my fourth week at Dev Bootcamp Phase 0. Actually it's the sixth, because we had two weeks off for Christmas. What I want to write about today is something I believe all new programmers run into when learning to program: the growing complexity of our programs. Each week is tougher, the challenges get more difficult, and the algorithms get more complex. When I was researching the problems today's tech world faces, I stumbled on an interesting one. Complexity.

It's kind of funny for a beginner programmer to talk about extreme complexity as a problem when I find even the most basic programs difficult. I won't go into the technicalities the modern world faces, though. I'll try to look at it from a wider point of view.

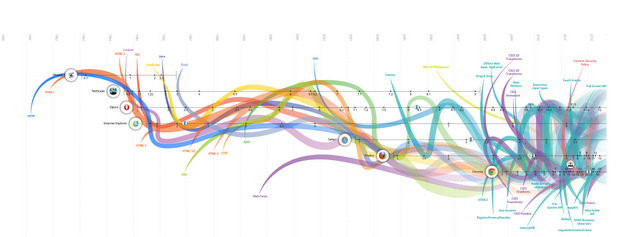

How did I get to this idea in the first place? Let's see. It all comes down to scale. When I study a language, I practice it more and more, and I get better and better at it. That is the small scale. But when the world learns a new technology, it goes through the same process. Let's take an example: the internet. The World Wide Web was invented around 1990, and at the time it was just HTML, and that was it. Just think of what goes into a good page today: CSS, JavaScript, JSON, Ruby, PHP, SQL, XML, Flash, and the list goes on and on. Each of them adds options, and complexity increases.

You could look at other technologies and see the same result. Computers are so complex today that we can't even imagine how they are made. Microprocessors are so small that the machines making them must be mounted on springs to cancel out any ground vibration that might ruin the process. And it doesn't apply just to electronics; it works for mechanics as well. Just look at the pocket watches people used to carry. Can you even imagine the fine mechanics needed to build one of them? It's beyond understanding. But they didn't start that way. First there were huge clocks, often on a city hall tower, and computers in the 60s were the size of a room. So just like me, starting with basic, clumsy code and facing tougher, more complex problems, so is the world. And where is this taking us?

Let's take a look at some of the world's most complex projects. Lockheed Martin is developing the F-35 Joint Strike Fighter, a plane that is supposed to be superior to any other on the planet, but some experienced aircraft engineers are critical of it. They say it lacks manoeuvrability and is too fragile, and they are especially critical of its cost (by the time it goes out of service in 2065, the estimated cost of the project is over 1 trillion dollars). The government supports it, though, and says the software is where this plane shines. So why has it still not been delivered, and why does the delivery date keep being postponed? This plane's software is supposed to have millions of lines of code! I can't say how much that is, because it's beyond my understanding, but I'm positive it's a lot. And this is supposed to be the reason for its delay. Now, here's the question. Is it too complex? Is it beyond our capability? Are we unable to do this yet? Will we ever be able to? It all remains to be seen. The current delivery date is December 2015.

Another thing to point out is again related to planes. It's the Traffic Collision Avoidance System, or TCAS for short. This system is mandatory for planes above a certain weight, and what it does is alert the pilot to a possible collision with another transponder-equipped plane. The calculations involved are so absurd that their complexity is understood by only a handful of people. And this got me thinking.

Let's imagine that humanity develops a robot like the ones we know from the movies, and its software is so complex that only two people understand it and can actually make deep changes to the code. Remember that the more complex the code is, the more fragile it is. Then a tragedy happens: these two people die in an accident, and there's nobody left who understands it. What would happen?

This is an expensive project, so you can't shut it down, but nobody knows the code well enough to manage it. You build plenty of robots, because it's good money. By this time mass production is all done by robots, as much of it already is today. And then a bug appears that makes the robots aggressive in certain situations, and there's nobody to fix them. What would happen? I don't know, but it sure sounds like a prequel to The Matrix. It might sound weird, but today's sci-fi can be tomorrow's reality. And just to confirm that I'm not completely talking BS, I want to mention that a very important man has said that AI is the greatest danger humanity faces today. This man is Elon Musk.